Mastering Recursion Algorithms

A comprehensive guide on various strings operations and techniques used in algorithm design, particularly for solving problems efficiently - recursion - pascal triangle -

Intro.

A recurrence relation is a formula that defines each term of a sequence using the previous terms. It's like a rule that tells you how to get the next number in the sequence based on the numbers you already have.

For example, consider a sequence where each number is twice the previous number, starting with 1. The recurrence relation is:

- First term: a(1) = 1

- Following terms: a(n) = 2 * a(n-1)

So, the sequence starts with 1, then 2 (2*1), then 4 (2*2), then 8 (2*4), and so on.

Tracing a recursive function

involves following each step and call of the function to understand how it processes input and reaches its result.

For example, consider a simple recursive function that calculates the factorial of a number:

```python

def factorial(n):

if n == 0:

return 1

else:

return n * factorial(n-1)

```

To trace `factorial(3)`, follow these steps:

1. `factorial(3)` calls `factorial(2)`

- Needs to calculate `3 * factorial(2)`

2. `factorial(2)` calls `factorial(1)`

- Needs to calculate `2 * factorial(1)`

3. `factorial(1)` calls `factorial(0)`

- Needs to calculate `1 * factorial(0)`

4. `factorial(0)` returns `1` (base case)

- So, `factorial(1)` becomes `1 * 1 = 1`

- Then, `factorial(2)` becomes `2 * 1 = 2`

- Finally, `factorial(3)` becomes `3 * 2 = 6`

Thus, `factorial(3)` returns `6`.

Recursion is a programming technique where a function calls itself in order to solve a problem. It's based on the principle of solving a complex problem by breaking it down into simpler instances of the same problem.

Key Concepts of Recursion

1. **Base Case**: This is the condition under which the recursion stops. It's crucial to avoid infinite recursive calls.

2. **Recursive Case**: The part of the function where it calls itself, each time with a simpler or smaller version of the original problem.

How Recursion Works

Recursion involves two phases:

- **Winding Phase**: The function keeps calling itself and the calls are stacked up until it reaches the base case.

- **Unwinding Phase**: Once the base case is reached, the stack starts to unwind and the function starts returning values.

Recursive Function and Stack Memory

Each recursive call gets its own separate space in the system's stack memory. This space holds the function's local variables and parameters. When a recursive call reaches the base case and returns, its stack frame is popped off the stack.

Example in Python: Counting Function Calls

In Python, we can use a default argument in the function definition to keep track of the count. This default argument will act similarly to a static variable.

def recursive_function(count=0):

# Increment the count

count += 1

# Base case to stop the recursion

if count == 5:

print(f"Function called {count} times.")

return

# Recursive call

recursive_function(count)

recursive_function()

'Function called 5 times.'Explanation

Each time

recursive_functionis called, it receives thecountparameter. If not provided,countdefaults to 0.On each call,

countis incremented by 1.The base case is set such that when

countreaches 5, the function prints the number of times it was called and then returns, stopping further recursive calls.If the base case is not met, the function calls itself, passing the incremented

countvalue.

Recursion vs Loops

While recursion can often be replaced with loops (iterative solutions), it offers a more elegant and straightforward approach for certain problems, especially those that naturally fit the recursive model like tree traversals, factorial calculations, and solving puzzles like the Tower of Hanoi.

### Key Points to Remember

1. **Infinite Recursion**: Always ensure there's a base case to avoid infinite recursion.

2. **Stack Overflow**: Recursive calls consume stack space, so excessive recursion can lead to a stack overflow error.

3. **Performance Considerations**: Sometimes, iterative solutions are more efficient than recursive ones, especially for problems with a large number of recursive calls.

The time complexity for both approaches is the same. However, the space complexity can differ. Without tail call optimization (TCO), tail recursion uses O(n) space, while with TCO and for loops, it uses constant space, O(1). It's worth noting that in practice, many modern compilers implement TCO for tail recursion, making the space complexity effectively O(1) as well. This is why tail recursion is often as efficient as iteration from a space standpoint when TCO is utilized.

Let's dive into each of these types of recursion:

1. Tail Recursion

In tail recursion, the recursive call is the last thing executed by the function. There is no need to keep record of the previous state since the current function's task is complete when the recursive call starts. Tail recursion is often more memory-efficient because it can be optimized by the compiler to a simple loop.

2. Head Recursion

Head recursion is when the recursive call is the first thing executed in the function. The processing happens after the recursive call, and not before it. In head recursion, the recursive call happens first, and then any calculation (if necessary) occurs after the call.

3. Tree Recursion

Tree recursion occurs when a function makes multiple recursive calls. It’s called tree recursion because it branches out like a tree.

Example:

def tree_recursive_fibonacci(n):

if n <= 1:

return n

else:

return tree_recursive_fibonacci(n-1) + tree_recursive_fibonacci(n-2)

4. Indirect Recursion

Indirect recursion involves a cycle of function calls. In this case, a function calls another function, which in turn calls the first function.

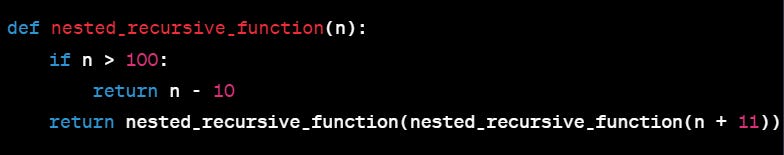

5. Nested Recursion

In nested recursion, the recursive call is made to a parameter that is itself a recursive call.

Tail recursion is often preferred for its efficiency, while tree recursion is useful for problems that naturally branch out, like the Fibonacci sequence or binary tree traversals. Indirect recursion can be seen in mutually recursive structures, and nested recursion, although less common, can be used for certain mathematical functions.

Memoization

Memoization is an optimization technique used primarily to speed up computer programs by storing the results of expensive function calls and returning the cached result when the same inputs occur again. It is a form of caching where the return values of a function are stored in a data structure (like a hash map or array) so that future calls with the same arguments can return instantly without having to redo the computation.

In the context of the Fibonacci sequence example provided, memoization can significantly improve performance. Computing the Fibonacci sequence recursively without memoization results in a time complexity of O(2^n) due to the exponential growth of function calls. However, with memoization, each unique function call result is stored the first time it is calculated, so subsequent calls with the same arguments can return the stored result immediately.

Here's a breakdown of how memoization changes the process:

1. `fib(5)` will call `fib(4)` and `fib(3)`.

2. `fib(4)` will call `fib(3)` and `fib(2)`.

3. Without memoization, `fib(3)` will be calculated twice independently.

4. With memoization, the first calculated value of `fib(3)` is stored.

5. When `fib(3)` is called the second time, the stored value is returned without a need for recalculation.

By using memoization, the time complexity is reduced to O(n) because each of the `n` Fibonacci numbers from `0` to `n` is computed exactly once. The space complexity is also O(n) to store the memoization cache for `n` numbers.

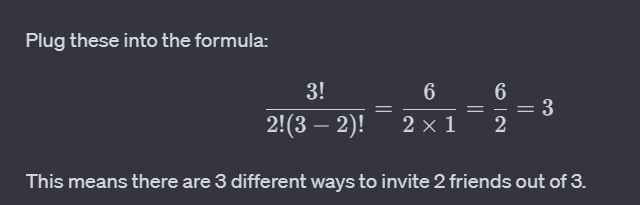

Combination Formula:

Imagine you have 3 friends: Alice, Bob, and Charlie. You want to invite 2 of them to a movie. How many different pairs of friends can you invite?

Here, you are choosing 2 friends out of 3. The combination formula helps you find out how many different pairs you can form. The formula is:

So, you calculate it like this:

3!=3×2×1=63!=3×2×1=6 (3 factorial is the product of all positive integers up to 3)2!=2×1=22!=2×1=2 (2 factorial)(3−2)!=1!=1(3−2)!=1!=1

These combinations are:

Alice and Bob

Alice and Charlie

Bob and Charlie

So, you have 3 different pairs of friends you can invite to the movie.

Let us use Recursion now:

Pascal's Triangle is a useful way to visualize combinations. Each row of Pascal's Triangle represents the coefficients for the binomial expansion and these coefficients are actually the values of combinations. Let's use Pascal's Triangle to find the number of ways to choose 2 items from 4 (which is

C(4,2).

Pascal's Triangle is a triangular array of numbers where each number is the sum of the two numbers directly above it. You can use it to find out how many ways you can choose a certain number of items from a larger group.

Here's a part of Pascal's Triangle:

Example: Let's find the number of ways to choose 2 items from 4, which is C(4, 2).

Find row 4 in Pascal's Triangle (because we're choosing from 4 items).

Count the positions in the row starting from 0. We want the 2nd position (because we're choosing 2 items).

The number at the 2nd position in row 4 is 6.

So,

C(4, 2)is 6. This means there are 6 different ways to choose 2 items from a set of 4.

In Pascal's Triangle, the relationship between the row number, column number, and the combination numbers (n and k) in C(n,k) is straightforward,

Each row represents the different ways you can choose items from a set of size

n.

The column number in a row corresponds to

kin C(n,k). It represents the number of items you are choosing.

What about recursion ?

In Pascal's Triangle, each number (except for the 1s at the edges) is the sum of the two numbers directly above it. This is exactly what the recursion in the function does.

For instance, consider C(4, 2). According to the function, this would be calculated as:

C(4, 2) = C(3, 1) + C(3, 2)

Now, look at the 3rd row in Pascal's Triangle (remember, we start counting rows from 0):

Row 3: 1 3 3 1k == 0: This condition checks ifkis 0. In the world of combinations, C(n,0) is always 1, because there is exactly one way to choose zero items fromnitems (which is to choose nothing). It doesn't matter how many items you have; if you choose none of them, there's only one way to do it.k == n: This condition checks ifkis equal ton. In combinations, C(n,n) is also always 1. This is because there's exactly one way to choose allnitems from a set ofnitems (which is to choose all of them).

Essential questions

Resources

https://www.techinterviewhandbook.org/algorithms/recursion/

https://recursion.vercel.app/