Tools in LangGraph

A tool in LangChain links a Python function with a schema that defines its name, description, and arguments. This schema is passed to chat models, allowing them to understand the tool and request calls to it with the right arguments.

In the context of LangChain tools, the term “schema” has a very precise meaning. First, let's define some terms, then explain:

Tool: A Python function you want the model to use.

Schema: A structured description of the tool: its name, description, arguments, and optionally return type.

Purpose: Allows the model to call the function safely and correctly.

Decorator (@tool): Automates schema creation and supports customization, hidden arguments, and artifact returns.

1. What is a schema in this context?

A schema is essentially a structured description of a tool (or function) that tells the model:

The tool’s name: What this function is called.

The tool’s description: What this function does and why it exists.

The expected arguments: What inputs the function needs, including types, optionality, and constraints.

Think of the schema as a contract between the tool and the model. It says:

“Here’s how you can call me, what I do, and what I need from you.”

2. Why do we need a schema?

When using chat models that support tool calling:

The model never sees or runs your Python code.

But it can read the schema and understand:

“Oh, there is a tool called get_weather.”

“It expects a city argument as a string.”

“I can call it to get the weather.”

Without a schema, the model cannot safely or correctly call your function.

3. Creating Tools

LangChain makes this easy via the @tool decorator. For example:

```python
from langchain.tools import tool

@tool
def add_numbers(a: int, b: int) -> int:
    """Adds two numbers together and returns the result."""
    return a + b
```

Use the tool directly

Once you have defined a tool, you can use it directly by calling its invoke method with a dictionary of arguments. For example, to use the add_numbers tool defined above:

```python
add_numbers.invoke({"a": 2, "b": 3})  # returns 5
```

The tool's schema is automatically inferred from the function name, type hints, and docstring. You can also customize it manually if you want.

Key features of schemas

Automatic inference

LangChain reads the function signature and docstring to build the schema.

Example: It knows that a and b are integers and that both are required by the function.

Return types / artifacts

Some tools return more than simple text. For example:

Images

DataFrames

JSON objects

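The tool definition can declare this, for example with response_format="content_and_artifact", so the model receives a compact text result while the rich output is kept for your code.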

Injected / hidden arguments

Sometimes a tool has internal parameters you don’t want the model to see.

Example: API keys, configuration flags.

These are injected when the tool is called, not exposed in the schema.

Suppose you have a tool that fetches weather data from an API. You don’t want the model to know your API key—that’s sensitive information.

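```python
from typing import Annotated

from langchain_core.tools import InjectedToolArg, tool

# This is your API key (sensitive)
API_KEY = "my_secret_api_key"

@tool
def get_weather(city: str, api_key: Annotated[str, InjectedToolArg]) -> str:
    """Returns the current weather for a given city."""
    # A plain default (api_key: str = API_KEY) would still be listed in the
    # schema sent to the model; InjectedToolArg keeps the argument out of it.
    # Simulate an API call, and never echo the key back to the model.
    return f"Weather in {city} is sunny"

# Your code, not the model, passes the key when it runs the tool
get_weather.invoke({"city": "Cairo", "api_key": API_KEY})
```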

Injected arguments allow tools to have internal configurations, credentials, or extra data without exposing them to the model.

The model only interacts with the arguments it needs.

Why the schema matters for models

Without a schema, the chat model cannot:

Understand what tools exist.

Know what inputs to provide.

Call tools safely with correct arguments.

With a schema:

The model sees a structured “tool API”.

It can automatically decide which tool to call and with what inputs.

It reduces errors and misunderstandings when tools are invoked.

Tool interface in LangChain

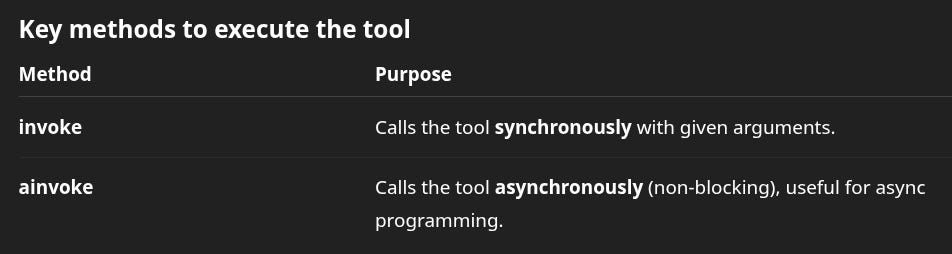

In LangChain, every tool has a standard interface—this is defined in the BaseTool class.

BaseTool is like a blueprint for all tools. It makes sure every tool has the same structure and can be called in the same way by models or workflows.

Think of it as the “contract” a tool must follow.

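```python
# my_tool is any tool that takes a city argument
result = my_tool.invoke({"city": "Cairo"})  # synchronous
result = await my_tool.ainvoke({"city": "Cairo"})  # asynchronous (inside an async function)
```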


Tool artifacts:

When you create a tool in LangChain, its main purpose is to return something to the model, usually a message or data the model can use.

But sometimes, a tool produces extra information that the model doesn’t need to see directly, but you want to keep for later.

This extra information is called a tool artifact.

Examples of artifacts:

A dataframe with hundreds of rows.

An image generated by the tool.

A custom Python object (e.g., a processed dataset).

You don’t want the model to receive the raw artifact, because it might be too big, complicated, or irrelevant for the model. But you want to access it later in your chain or other tools.

How to return both a message and an artifact

LangChain provides a special response format:

```python
from typing import Any, Tuple

from langchain.tools import tool

@tool(response_format="content_and_artifact")
def some_tool() -> Tuple[str, Any]:
    """Tool that does something and produces an artifact."""
    some_artifact = {"data": [1, 2, 3]}  # could be a dataframe, image, etc.
    return "Message for chat model", some_artifact
```

Key idea

In LangChain, when you use:

```python
@tool(response_format="content_and_artifact")
```

The tool returns a tuple:

```python
return content_for_model, hidden_artifact
```

content_for_model → what the model sees.

hidden_artifact → what the model does NOT see, but you can use in your Python code or in downstream tools.

The response_format="content_and_artifact" tells LangChain:

“Only give the first item of the tuple to the model. Keep the second item for internal use.”

Why is this useful?

Keeps the model focused on relevant information.

Lets downstream components access rich data.

Works well for tools that produce more than just text, like images, plots, or datasets.

Think of it like this:

You’re showing the model the summary, but keeping the full report for yourself or later tools.

Example usage in a workflow

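```python
# Invoke the tool the way an agent does: with a full tool call
msg = some_tool.invoke({"name": "some_tool", "args": {}, "id": "call_1", "type": "tool_call"})

print(msg.content)   # Model sees this
print(msg.artifact)  # You or another tool can use this
```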

Output:

Message for chat model

{'data': [1, 2, 3]}

This means that tool output can have two parts:

Content → visible to the model

Artifact → hidden, for later use

This is essential when building multi-step pipelines or agents where the model doesn’t need every detail.

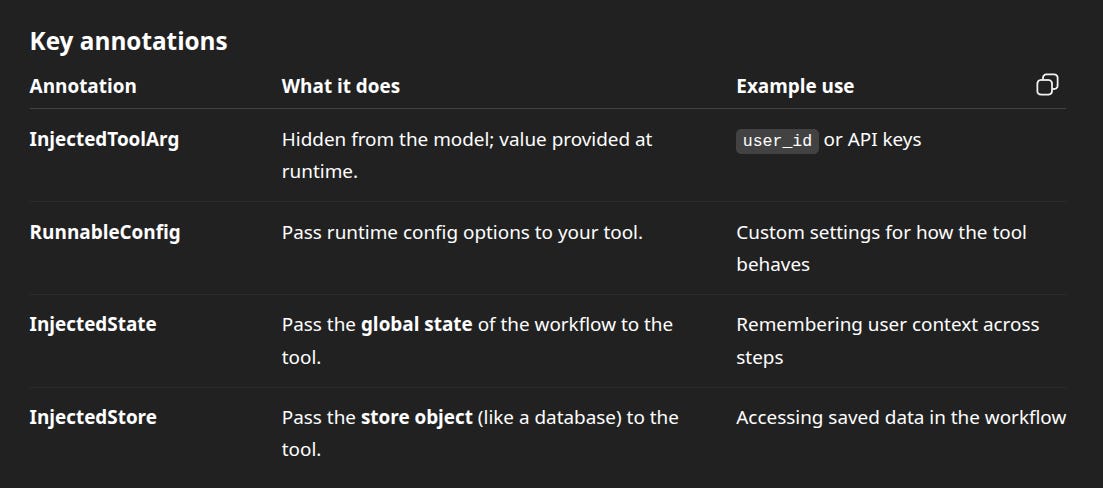

Special type annotations in LangChain tools

When you define a tool in LangChain, you usually create a Python function. But sometimes, some arguments:

Should not be given by the model

Are used internally

Should be injected at runtime

LangChain gives special type annotations for this. These annotations tell the system:

“Hey, this argument is special. Don’t show it to the model. We’ll fill it in later.”

Example: InjectedToolArg

```python
from typing import Annotated

from langchain_core.tools import InjectedToolArg, tool

@tool
def user_specific_tool(input_data: str, user_id: Annotated[int, InjectedToolArg]) -> str:
    """Processes input for a specific user."""
    return f"User {user_id} processed {input_data}"
```

user_id is hidden from the model. You provide user_id when calling the tool manually:

```python
user_specific_tool.invoke({"input_data": "Hello", "user_id": 123})
```

Example: RunnableConfig

```python
from langchain_core.runnables import RunnableConfig
from langchain_core.tools import tool

@tool
async def configurable_tool(param: str, config: RunnableConfig):
    """Use config inside the tool."""
    print(config)  # you can access runtime settings
```

The model never sees config. You provide values at runtime, under the configurable key (inside an async function):

```python
await configurable_tool.ainvoke({"param": "test"}, config={"configurable": {"value": 42}})
```

Example analogy for tool artifacts vs. injected state (LangGraph's InjectedState annotation, which gives a tool read access to the graph state):

Tool artifact → like a note you pass to the next tool

Injected state → like a shared notebook for the whole workflow

Best practices for tools

Name tools clearly and write good docstrings

Type hint your arguments → makes it easier for the model to call

Keep tools small and focused

Use models that support tool calling so they can pick which tool to use automatically

Special type annotations hide arguments from the model and allow runtime injection.

Tool artifacts are temporary outputs; injected state is persistent across the workflow.

Keep tools small, well-documented, and type-hinted.

Toolkits are groups of tools you can manage together.

Toolkits

A toolkit in LangChain is a group of related tools bundled together. Instead of creating or picking tools one by one, you get them all at once from the toolkit, which makes it easier to manage a set of tools for a task.

Think of it as a toolbox: it has many tools inside, all designed to work for a specific task.

“Knife → tool to cut, Peeler → tool to peel, Grater → tool to grate”

```python
# Initialize a toolkit
toolkit = ExampleToolkit(...)

# Get list of tools
tools = toolkit.get_tools()
```