Understanding Caching Systems: From Basics to Algorithms

Back-end - 0x01. Caching - deque -stack - OrderedDict

Intro

Caching reduces the time it takes to retrieve data by storing frequently accessed information in faster storage layers. This significantly decreases the latency for data retrieval, leading to faster response times for user requests.

Caching requires additional memory and storage resources. Efficiently managing these resources to balance between cache size, performance gains, and costs is a critical aspect of caching strategy.

What is a Caching System?

A caching system stores temporary data so that future requests for that data can be served faster. The data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere.

Python Example: Basic Cache Implementation

What Does FIFO Mean?

FIFO stands for First-In, First-Out. It is a caching algorithm where the first block that comes in is the first one to be replaced when the cache is full.

Scenario: News Website with Daily Updates

Using the FIFO caching system, the cache will be filled with the day's top stories as they are published. Let's say your cache can only hold 10 articles at a time due to memory constraints. When the 11th story is published, it will enter the cache, and the very first story that was cached

To implement easily we can make use of deque:

Deque (Double Ended Queue) in Python is implemented using the

collectionsmodule. It is preferred over a list in situations where we need quicker append and pop operations from both ends of the container, as deque provides an O(1) time complexity for these operations, as opposed to a list that provides O(n) time complexity.

append(): This method is used to add an element to the right end, popleft(): This method is used to remove an element from the left- pop(): This method is used to remove an element from the rightPython Example: FIFO Cache using deque:

What Does LIFO Mean?

LIFO stands for Last-In, First-Out, similar to a stack. It’s a principle where the last block to be inserted is the first one to be removed when the cache is full.

A common real-world application of LIFO is the 'undo' feature found in many software applications, like text editors or graphic design programs.

Python Example: LIFO Cache (Stack)

OrderedDict:

An OrderedDict is a dictionary subclass in Python that remembers the order in which keys were first inserted. It's part of the collections module.

Here are some unique features of OrderedDict:

It maintains the order of elements based on their insertion order.

If a new entry overwrites an existing entry, then the order of items is left unchanged.

If an entry is deleted and reinserted, then it will be moved to the end of the dictionary.

It supports reverse iteration using

reversed().Equality tests between

OrderedDictobjects are order-sensitive

What Does LRU Mean?

LRU stands for Least Recently Used. It's a caching algorithm that removes the least recently used items first. This algorithm requires keeping track of what was used when, which is expensive if one wants to make sure the algorithm always discards the least recently used item. “least recently accessed”

Use of OrderedDict in Cache:

OrderedDict keeps track of the order in which items are inserted. When an item is accessed, it's moved to the end of the OrderedDict, indicating that it has been used most recently.

The methods

move_to_endandpopitemof theOrderedDictclass in Python'scollectionsmodule are particularly useful for managing the order of items

move_to_end Method

The move_to_end method is used to move an existing key to either end of the OrderedDict. The method signature is as follows:

move_to_end(key, last=True)key: The key within theOrderedDictto move.last: This is a boolean argument. IfTrue(the default), the key is moved to the right end (indicating it was most recently used). IfFalse, the key is moved to the left end.

The popitem method

is used to remove and return a (key, value) pair from the OrderedDict. The method signature is as follows:

popitem(last=True)last: This argument controls which end of the dictionary to pop from. IflastisTrue(the default), the method pops and returns the last(key, value)pair, mimicking a LIFO (Last In, First Out) queue. IflastisFalse, the first(key, value)pair is popped, mimicking a FIFO (First In, First Out) queue.

How LRU Logic thinks?

Access or Update Operations: When an item is accessed (through a get operation) or updated/added (through a put operation), it is considered "used" and thus should be marked as recently used. This is achieved by moving the item to the end of the OrderedDict using the move_to_end method, signifying it as the most recently used item.

for an LRU cache, you should remove the first added item (the least recently used one) by using

popitem(last=False).

popitem(last=False).What LFU Means ?

LFU, or Least Frequently Used, is a cache eviction algorithm that removes the items that are least frequently used when the cache reaches its capacity. Unlike LRU, LFU takes into account how often an item is accessed, not just when it was accessed last.

Python Implementation of LFU Cache

Implementing an LFU cache is more complex than LRU or MRU due to the need to efficiently track access frequencies.

we need to track cache and frequency as example:

Add a key-value pair (1, "A"):

lfu.put(1, "A")vals = {1: "A"}(Key1with value"A"is added)counts = {1: 1}(Key1's access frequency is now 1)lists ={0: [],1: [1]}(Key

1is added to the frequency list for 1 access)minCount = 1(The minimum frequency in the cache is now 1)

Add another key-value pair (2, "B"):

lfu.put(2, "B")vals = {1: "A", 2: "B"}(Key2with value"B"is added)counts = {1: 1, 2: 1}(Both keys1and2have an access frequency of 1)lists = {0: [], 1: [1, 2]}(Key2is added to the frequency list for 1 access)minCount = 1(The minimum frequency is still 1)Accessing a Key

Retrieve the value for key

1:lfu.get(1)valsremains unchangedcounts = {1: 2,2: 1}(Key1's access frequency is incremented to 2)lists = {0: [], 1: [2], 2: [1]}(Key1is moved to the frequency list for 2 accesses)minCount = 1(The minimum frequency is still 1, as key2is accessed only once)

Hints in practice:

Overloading the

__init__method means providing a new implementation for the__init__method in a subclass that overrides the one in its superclass.In Python, when you create a subclass, it inherits all the methods from its superclass. If you want to change the behavior of one of these methods in your subclass, you can provide a new implementation for the method in your subclass. This is called method overriding.

In Python, you can get the number of items in a dictionary using the

len()function.In the context of your code, if you want to get the number of items in the

cache_datadictionary, you can do it like this:num_items = len(self.cache_data)

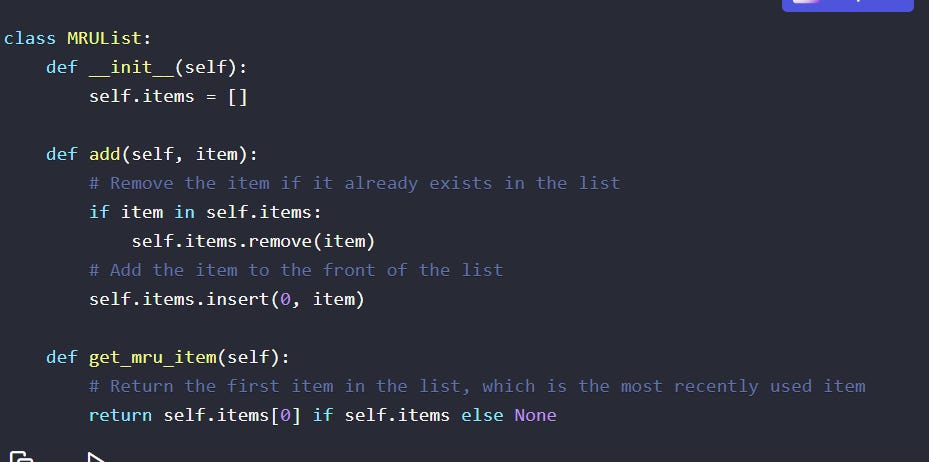

MRU (Most Recently Used)

MRU (Most Recently Used) caching is a type of caching algorithm that prioritizes retaining the most recently accessed items in the cache. It's based on the principle that the items you've used most recently are likely to be used again soon. This is in contrast to other caching strategies like LRU (Least Recently Used).

In an MRU cache, whenever a new item is accessed, it is moved to the top of the cache. If the cache reaches its capacity and needs to make room for new items, the MRU algorithm will discard the item that was most recently added or accessed.

MRU can be useful in specific contexts where the most recent data is less likely to be needed again immediately. For example, in certain types of data streaming or processing scenarios where once the most recent data is processed, it's unlikely to be needed again soon.

In this example, the add method adds an item to the front of the list, and the get_mru_item method returns the first item in the list, which is the most recently used item.

However, this approach is quite simple and may not be efficient for large lists. A more advanced approach uses a combination of a doubly linked list and a hash map to achieve constant time complexity for both adding and accessing the most recently used item.

Resources:

https://www.enjoyalgorithms.com/blog/implement-least-recently-used-cache

This content is updating, stay tuned …